You need more than p-values

Since the first time I ran an A/B test, something has felt off. For years, I tried to put my finger on what exactly bothered me about the process, without success. Today, I’ve finally put it into words. Let me be clear: I don’t think A/B testing is inherently bad. When used correctly, it can be a very useful tool. However, I do have concerns about relying solely on mathematical calculations to make important business decisions. I believe business decisions should be based on business considerations, not just math. Don’t let your business be run by $\chi^2$ tests.

What’s an A/B test?

Before I start dissing A/B testing, I’ll briefly summarize what an A/B test is 1. It basically consists of four steps:

- You propose a hypothesis, e.g., “if we change the banner color from green to red, we’ll get more sales.”

- You split your population into two groups. One group sees the green banner (variant A) and the other sees the red banner (variant B). Then you wait for some days; how many must be computed beforehand, from the sample size the test needs.

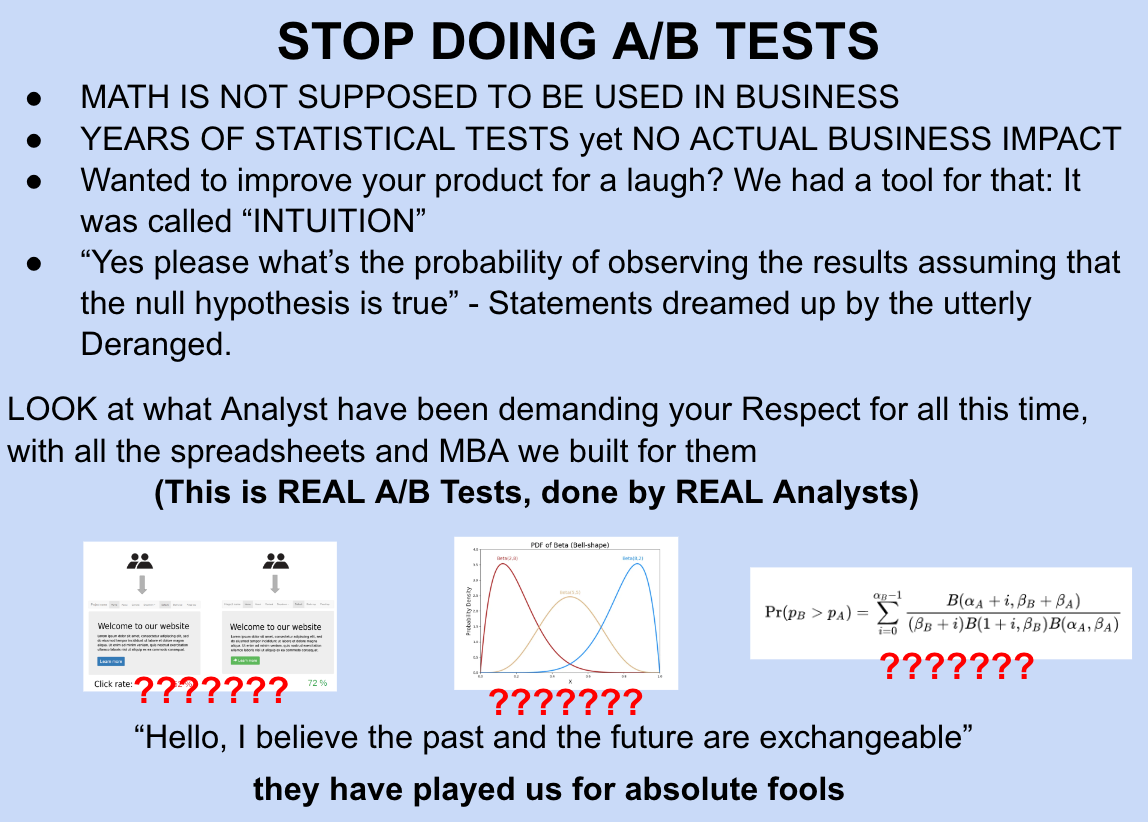

- You analyze the data you collected during the experiment. Usually, this means computing the uplift of the new variant with respect to the old one and its statistical significance; that is, you run a $\chi^2$ test if you’re a frequentist, or you compute $P(p_A < p_B)$, where $p_A$ and $p_B$ are the conversion rates of the two variants, if you’re a Bayesian.

- If the results of the analysis are good enough (typically this means $p<0.05$), you declare the test a success and deploy the best variant to all the users.
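The planning and analysis steps above can be sketched in a few lines of Python. This is a minimal sketch using only the standard library, not the author’s tooling, and every function name and number in it is hypothetical: `required_sample_size` uses the usual normal-approximation formula for comparing two proportions (assuming a two-sided test, $\alpha = 0.05$ and 80% power by default), `chi2_test` runs a Pearson $\chi^2$ test without continuity correction on the 2×2 table of converted/not converted users, and `prob_b_beats_a` estimates $P(p_A < p_B)$ by Monte Carlo, assuming uniform Beta(1, 1) priors on both conversion rates.

```python
import math
import random
from statistics import NormalDist


def required_sample_size(p_base, min_uplift, alpha=0.05, power=0.8):
    """Approximate users needed per variant to detect a relative uplift in a
    conversion rate (normal approximation, two-sided test)."""
    p_new = p_base * (1 + min_uplift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_power = NormalDist().inv_cdf(power)
    variance = p_base * (1 - p_base) + p_new * (1 - p_new)
    return math.ceil((z_alpha + z_power) ** 2 * variance / (p_new - p_base) ** 2)


def chi2_test(conv_a, n_a, conv_b, n_b):
    """Pearson chi-squared test (no continuity correction) on the 2x2 table
    variant x {converted, not converted}. Returns (statistic, p-value)."""
    total = n_a + n_b
    conv = conv_a + conv_b
    stat = 0.0
    for converted, n in ((conv_a, n_a), (conv_b, n_b)):
        expected_conv = n * conv / total
        expected_non = n * (total - conv) / total
        stat += (converted - expected_conv) ** 2 / expected_conv
        stat += (n - converted - expected_non) ** 2 / expected_non
    # With 1 degree of freedom, P(chi2 > stat) = erfc(sqrt(stat / 2)).
    return stat, math.erfc(math.sqrt(stat / 2))


def prob_b_beats_a(conv_a, n_a, conv_b, n_b, draws=50_000, seed=0):
    """Monte Carlo estimate of P(p_A < p_B) with uniform Beta(1, 1) priors."""
    rng = random.Random(seed)
    wins = 0
    for _ in range(draws):
        p_a = rng.betavariate(1 + conv_a, 1 + n_a - conv_a)
        p_b = rng.betavariate(1 + conv_b, 1 + n_b - conv_b)
        wins += p_a < p_b
    return wins / draws


# Hypothetical plan: 5% baseline conversion, detect a +10% relative uplift.
n_per_variant = required_sample_size(p_base=0.05, min_uplift=0.10)

# Hypothetical results after collecting 32,000 users per variant.
conv_a, n_a, conv_b, n_b = 1600, 32_000, 1760, 32_000
uplift = (conv_b / n_b) / (conv_a / n_a) - 1
stat, p_value = chi2_test(conv_a, n_a, conv_b, n_b)
prob = prob_b_beats_a(conv_a, n_a, conv_b, n_b)
print(f"needed per variant: {n_per_variant}, uplift: {uplift:.1%}")
print(f"chi2 = {stat:.2f}, p = {p_value:.4f}, P(p_A < p_B) = {prob:.3f}")
```

Note that both numbers only describe the data collected during the test window; neither says anything about whether the uplift will persist.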

This approach has been used and reused thousands of times in thousands of companies since the dawn of the digital world. It’s a standard approach, and if you do it right, you have solid math that tells you whether the difference was significant. Everyone uses it, and a lot of money has been made by following it. So why on earth am I writing a post against A/B testing?

Even if everything goes right…

Running an A/B test is non-trivial, and a lot can go wrong. Here’s a non-exhaustive list:

- You can get false positives, i.e., you conclude there is a real difference between the variants when there isn’t one.

- You can get non-significant results, i.e. you can’t say if variant B was better than variant A.

- Your boss can ask you to stop the test before reaching the planned sample size because they saw on a dashboard that the results were already significant (peeking at running A/B tests is a bad idea).

- You can have tracking bugs that pollute your data.

- You can have bugs that move users from variant A to variant B, or vice versa.

- You can use the wrong statistical test and report meaningless results.

- You can use the correct statistical test but implement it with a bug, and still report meaningless results.

- And many more.
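Two of the failure modes above are easy to see in code. The sketch below uses hypothetical function names and made-up parameters. `simulate_aa_tests` runs A/A tests, where both variants share the same true conversion rate so every “winner” is a false positive, and compares analyzing once at the planned sample size with stopping at the first interim look where $p < 0.05$. `srm_p_value` is a sample ratio mismatch (SRM) check: a $\chi^2$ goodness-of-fit test of whether the observed split between variants matches the intended one, a common way to catch assignment or tracking bugs.

```python
import math
import random


def chi2_p_value(conv_a, n_a, conv_b, n_b):
    """Two-sided p-value of the Pearson chi-squared test on a 2x2 table (1 dof)."""
    total = n_a + n_b
    conv = conv_a + conv_b
    if conv in (0, total):
        return 1.0  # no variation in the outcome yet, nothing to test
    stat = 0.0
    for converted, n in ((conv_a, n_a), (conv_b, n_b)):
        expected_conv = n * conv / total
        expected_non = n * (total - conv) / total
        stat += (converted - expected_conv) ** 2 / expected_conv
        stat += (n - converted - expected_non) ** 2 / expected_non
    return math.erfc(math.sqrt(stat / 2))


def simulate_aa_tests(n_sims=400, looks=10, batch=200, rate=0.10, alpha=0.05, seed=1):
    """Simulate A/A tests and return (false-positive rate when analyzing once at
    the end, false-positive rate when stopping at the first look with p < alpha)."""
    rng = random.Random(seed)
    final_only = peeking = 0
    for _ in range(n_sims):
        conv_a = conv_b = n = 0
        significant_at_some_look = False
        for _ in range(looks):
            # Both arms share the same true rate, so any "winner" is a false positive.
            conv_a += sum(rng.random() < rate for _ in range(batch))
            conv_b += sum(rng.random() < rate for _ in range(batch))
            n += batch
            p = chi2_p_value(conv_a, n, conv_b, n)
            significant_at_some_look = significant_at_some_look or p < alpha
        final_only += p < alpha  # one analysis at the planned sample size
        peeking += significant_at_some_look  # stop once the dashboard looks significant
    return final_only / n_sims, peeking / n_sims


def srm_p_value(n_a, n_b, expected_share_a=0.5):
    """Sample ratio mismatch check: chi-squared goodness-of-fit p-value of the
    observed split against the intended one."""
    total = n_a + n_b
    exp_a = total * expected_share_a
    exp_b = total - exp_a
    stat = (n_a - exp_a) ** 2 / exp_a + (n_b - exp_b) ** 2 / exp_b
    return math.erfc(math.sqrt(stat / 2))


final_rate, peek_rate = simulate_aa_tests()
print(f"false positives, single analysis: {final_rate:.1%}, with peeking: {peek_rate:.1%}")
print(f"SRM p-value for 50,000 vs 48,500 users: {srm_p_value(50_000, 48_500):.2g}")
```

The single final analysis should stay close to the nominal 5%, while stopping at the first significant look should come out noticeably higher. For SRM checks, a conservative threshold such as $p < 0.001$ is often used, since a real mismatch usually signals a bug rather than bad luck.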

As you can see, running an A/B test is not an easy task. So when we complete a test and get significant results back, we are thrilled. We share the good news with the entire company on Slack to celebrate our success, the corresponding PM accepts the new feature, the frontend team rolls out the winning variant to all users, and everyone is happy.

But… even when everything goes right during the A/B test, there’s a sneaky problem that often goes unnoticed. Sure, you’ve followed all the rules, used the right math, and analyzed your data like a pro. But there’s a catch 2.

At the beginning of the post, we defined an A/B test as having four steps: (1) generating a hypothesis, (2) splitting the population, (3) analyzing the data and making a decision, and (4) deploying (or not) the changes. The issue I want to talk about lies in the third step, i.e., how the data is analyzed and decisions are made.

Almost always, analyzing the data of an A/B test implies running some kind of statistical test. For example, if you are interested in the click-through rate, you might use a $\chi^2$ test. With this test, you want to discern whether the observed difference between the variants is statistically significant. If it is, then the difference you measured is unlikely to be due to chance alone, so you declare a winning variant and deploy it. But this line of reasoning relies on a big assumption that is almost never challenged. When you observe a difference in your test and decide to deploy the best variant, it’s because you believe that the past and the future are going to behave the same. This is a big assumption, known as the exchangeability assumption.

Challenging the exchangeability assumption

The math will tell you whether there’s a significant difference in the data you saw during the test. Sounds good, right? But here’s the problem: you have to assume that what you saw in the test is how things will be in the future. And let’s be real, that’s a wild assumption. In the business world, things change fast. Very fast. What worked last quarter might flop this month. These statistical tests can’t tell you anything about how your business is going to behave in the coming months. Here are a few examples of what can change:

- Users follow trends during the year, and they don’t behave the same in summer as in winter.

- Users get used to the new stuff, and the excitement wears off (the so-called novelty effect).

- Your competition does something new, messing with how users see you.

- Big global changes mess with everything too.

- Public opinion evolves over time, shaping how your products are perceived.

And this is just a quick list of examples. If you start thinking about it, you’ll notice that the exchangeability assumption is wild. It almost seems that the opposite is true: the past and the future are always different.
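To make the novelty effect concrete, here is a toy model with entirely made-up numbers, not data from any real test: variant A converts at a constant 5%, while variant B starts with a 20% relative uplift that decays with a one-week half-life towards no long-run uplift. The exponential decay and all parameter names are assumptions chosen for illustration.

```python
def variant_b_rate(day, base_rate=0.05, initial_uplift=0.20,
                   long_run_uplift=0.0, half_life_days=7.0):
    """Hypothetical daily conversion rate of variant B under a novelty effect:
    the extra uplift decays exponentially towards a long-run uplift."""
    novelty = (initial_uplift - long_run_uplift) * 0.5 ** (day / half_life_days)
    return base_rate * (1 + long_run_uplift + novelty)


def average_uplift(first_day, last_day, base_rate=0.05, **model):
    """Average relative uplift of B over A (which stays at base_rate) on days
    first_day, ..., last_day - 1."""
    days = range(first_day, last_day)
    rates = [variant_b_rate(d, base_rate=base_rate, **model) for d in days]
    return sum(rates) / len(rates) / base_rate - 1


during_test = average_uplift(0, 14)     # a two-week test
months_later = average_uplift(90, 120)  # days 90 to 119 after launch
print(f"uplift during the test: {during_test:.1%}, three months later: {months_later:.2%}")
```

In this toy model the two-week test measures an average uplift of roughly 11%, which a large enough test would happily flag as significant, yet three months later the effect has all but vanished. The test was not wrong about the data it saw; the future simply wasn’t exchangeable with it.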

The big problem here is that people use hard math to feel safe. Since you’ve used rigorous math to derive your p-value, you are convinced that your results are as solid as the math behind them. But that’s not enough. As Ben Recht said in a recent post:

First and foremost, all of the beautiful rigor relies on the assumption that past data is exchangeable with the future. We are drawing logical conclusions under a fanciful, unverifiable hypothesis. I can also derive rigorous theorems about mechanics, assuming the Earth is flat and the center of the universe. There are diminishing returns of rigor when the foundational assumptions are untestable and implausible.

How to do it?

You might now ask: “OK Alex, it’s clear you have some problems with A/B tests, but then how would you do it?” I see two solutions to this problem: the first is to validate the exchangeability assumption, and the second is to not rely only on statistical tests. Let me explain both in depth.

Prove your exchangeability assumption

As I explained before, trusting your p-values to make future decisions implies a big assumption: that the past and the future are exchangeable, that is, that the data you saw in the past came from the same distribution as the data you’ll see in the future. Since this is a strong assumption, you should try to validate it before relying on it. The usual approach to validate it is to use holdback groups. According to Spotify, using holdback groups is the practice of exempting a set of users from experiments and new features, to see long-term effects and combined evaluation. With this approach, you can check whether the effects you measured during your tests still hold over time.
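A minimal sketch of how a holdback group might be implemented, assuming users are assigned by hashing their ID. The salts, the 5% share and the function names are hypothetical; this is not Spotify’s implementation. Because the holdback check uses one fixed salt, the same users are excluded from every experiment and feature. Deterministic hashing also keeps each user in the same variant across sessions, which guards against the bug of users drifting between A and B mentioned earlier.

```python
import hashlib


def bucket(user_id: str, salt: str) -> float:
    """Deterministically map a user to a number in [0, 1) with a salted hash."""
    digest = hashlib.sha256(f"{salt}:{user_id}".encode()).hexdigest()
    return int(digest[:15], 16) / 16 ** 15


def assign(user_id: str, experiment: str, holdback_share: float = 0.05) -> str:
    """Return "holdback", "A" or "B" for a user in a given experiment.

    The holdback check uses one fixed salt, independent of the experiment, so
    the same users are kept out of every experiment and new feature.
    """
    if bucket(user_id, "global-holdback") < holdback_share:
        return "holdback"
    # A per-experiment salt makes A/B splits independent across experiments.
    return "A" if bucket(user_id, experiment) < 0.5 else "B"


users = [f"user-{i}" for i in range(20_000)]
groups = [assign(u, "red-banner") for u in users]
print({g: groups.count(g) for g in ("holdback", "A", "B")})
```

Months later, you would compare a long-term metric between the holdback group and everyone else. If that gap is much smaller than the sum of the uplifts your tests reported, the past and the future were not as exchangeable as you assumed.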

However, this approach is expensive: it requires you to keep some users on a worse experience for a long time, and this can’t always be done. Moreover, some of the decisions you need to make simply aren’t testable. For example, if you’re the CEO of a logistics company and you think it’s a good idea to use drones to deliver things, then running a fancy A/B test to figure out whether you should use drones just doesn’t add up. Why? Because using drones is a big decision that shapes your whole business! You don’t want to test it out like a science experiment. You just want to say, “Hey, this is my awesome delivery style, and I’m sticking with it!” It’s all about making your product awesome, just the way you want it. Can you show me the A/B test Jeff Bezos ran before founding Amazon?

Stop only using p-values to make decisions

As we saw in the last section, it’s not easy to check the exchangeability assumption, so what can we do? My proposal is to not rely only on p-values to make business decisions. In particular, I believe a good businessperson should treat the statistical results of a test as just a small part of all the information needed to make a business decision. If you ask me, good executives have a strong intuition that drives the development of new features, and they are usually right. As Jeff Bezos said:

The thing I have noticed is when the anecdotes and the data disagree, the anecdotes are usually right. There’s something wrong with the way you are measuring it.

Two attitudes in this regard particularly bug me. The first is executives blindly trusting the p-values that the data team calculates for them. Executives get paid to know a lot about the business and to steer it through uncertainty. They get paid to make difficult decisions. They should anticipate the future, learn about the customers, understand the product, and make decisions that benefit users and, ultimately, the company. None of these things can be accomplished just by looking at a single number. For example, a good executive should be able to forecast the economic situation in the coming months and how it will impact the product, or anticipate the competition’s next move and how the company can minimize its impact. So please, don’t let your business be run by chi-squared tests or Bayesian simulations. If you are an executive, you have to embrace the responsibility that comes with the position and make the necessary decisions.

The other situation that bothers me is when data scientists get upset with executives for not blindly trusting their p-values, or with managers for asking to stop a test early and make a decision with partial data. From my perspective, these data scientists are too obsessed with statistical significance and have forgotten the big picture. The statistical test is just a small piece of the decision-making process. Also, as we saw, even if the results of the statistical test are positive, they can’t be assumed to hold in the future. So if you’re a data scientist, do yourself a favor: take your nose out of your math book and try to learn how the real world works. Mathematical rigor is useless when the assumptions do not hold.

In my opinion, these two attitudes come from focusing too much on the technique and forgetting about the context. A/B tests and randomized experiments are great tools that have been applied successfully in many areas. For example, in drug discovery and drug testing they work wonders. This is because the exchangeability assumption usually holds there: if a drug helps mitigate migraines, it’ll continue to help in the future (of course, you can develop resistance to a drug, but this is something medical researchers take into account). So, driven by the success of this technique in specific situations, some people believe it’s the only option available to evaluate business decisions.

So what’s my solution? Compute the p-values and any other statistics you want with your data. But also talk to users, learn about the business, understand the product, and basically build an intuition. Then, with all this information, make the decision you think is best.

Conclusions


1. If you want some good shit about A/B testing, I really recommend Evan Miller’s blog, especially the A/B testing articles.